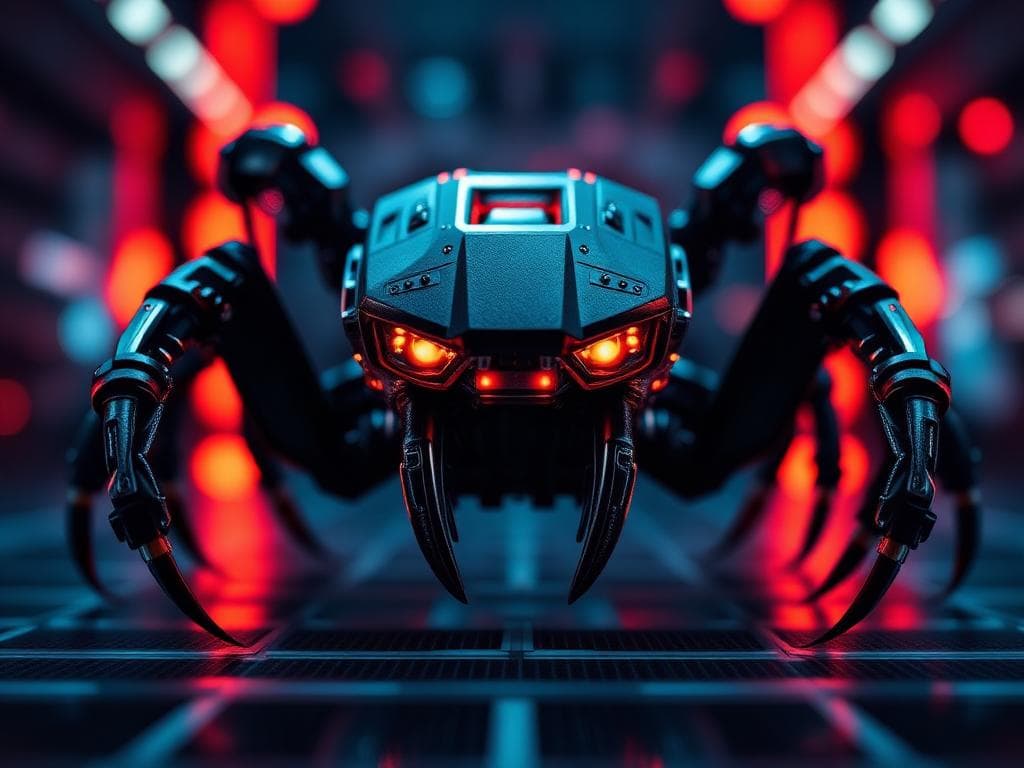

OpenClaw: A Cascade of LLMs Posing Risks to AI Development

Introduction to OpenClaw and its Architecture

OpenClaw, also known as Moltbot, is an AI system that uses a cascade of large language models (LLMs) to perform tasks. The architecture is potentially powerful, but experts are scrutinizing it because errors in a cascade are hard to contain and hard to trace.

The system's design chains multiple LLMs together, which lets it tackle a wide range of tasks. The same design can produce a cascade of errors: a mistake in one model can be amplified by the models that follow it, resulting in significant inaccuracies or undesirable outcomes.
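The compounding effect can be illustrated with a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that every model in a chain is correct with the same probability and that errors are independent and never corrected downstream. The 95% figure and the function name `chain_success_probability` are hypothetical; they are not measurements or components of OpenClaw.

```python
def chain_success_probability(per_stage_accuracy: float, stages: int) -> float:
    """Probability that every stage in a chain is correct.

    Simplifying assumptions (not taken from OpenClaw): each stage has the
    same accuracy, errors are independent, and no later stage repairs an
    earlier mistake.
    """
    if not 0.0 <= per_stage_accuracy <= 1.0:
        raise ValueError("per_stage_accuracy must be between 0 and 1")
    if stages < 0:
        raise ValueError("stages must be non-negative")
    return per_stage_accuracy ** stages


# Illustrative only: a hypothetical 95% per-stage accuracy.
for n in (1, 3, 5, 10):
    print(f"{n:2d} stages -> {chain_success_probability(0.95, n):.3f}")
```

Under these assumptions, a ten-stage chain is fully correct only about 60% of the time, even though each individual stage is right 95% of the time. Real cascades may do better or worse, since later models can sometimes correct earlier mistakes or, as the concern above suggests, amplify them.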

Technical Concerns with OpenClaw's LLM Cascade

One of the primary concerns with OpenClaw is the lack of transparency and control in its LLM cascade. Because the system relies on multiple models, it becomes increasingly difficult to identify the source of an error or to understand how a given output was produced.

- The complexity of the system makes it challenging to debug and maintain.

- The potential for error propagation is high, as a single mistake can be amplified throughout the cascade.

- The lack of transparency in the decision-making process can lead to trust issues and difficulties in validating the system's outputs.
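One common way to make a chained system easier to debug is to record what every stage received and produced, so that a bad final output can be traced back to the first stage that went wrong. The following is a minimal sketch of that idea, not a description of how OpenClaw works: the stages are plain Python functions standing in for LLM calls, and the names `StageRecord`, `run_cascade`, and `first_failing_stage` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Stage = Callable[[str], str]  # stand-in for a call to one model


@dataclass
class StageRecord:
    """What a single stage received and what it returned."""
    name: str
    input_text: str
    output_text: str


def run_cascade(stages: List[Tuple[str, Stage]], prompt: str) -> Tuple[str, List[StageRecord]]:
    """Run the stages in order, feeding each output to the next stage,
    and keep a record of every intermediate result."""
    trace: List[StageRecord] = []
    current = prompt
    for name, stage in stages:
        output = stage(current)
        trace.append(StageRecord(name, current, output))
        current = output
    return current, trace


def first_failing_stage(trace: List[StageRecord], check: Callable[[str], bool]) -> Optional[str]:
    """Return the name of the earliest stage whose output fails `check`,
    or None if every recorded output passes."""
    for record in trace:
        if not check(record.output_text):
            return record.name
    return None


# Hypothetical stages; "rewrite" simulates a faulty model that returns nothing.
demo_stages = [
    ("draft", lambda text: text.strip()),
    ("rewrite", lambda text: ""),
    ("format", lambda text: f"[{text}]"),
]

final_output, demo_trace = run_cascade(demo_stages, "  hello  ")
culprit = first_failing_stage(demo_trace, lambda out: len(out) > 0)
print("final output:", repr(final_output))
print("first failing stage:", culprit)
```

Without the trace, only the final output is visible and the faulty stage cannot be identified from it. With the trace, the empty output of the `rewrite` stage is the first record that fails the check.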

Implications for AI Development and the Future of Work/Code

The development and deployment of systems like OpenClaw have significant implications for how AI is built and for how it affects work and code. As AI is integrated into more industries, the risks and consequences of such systems must be carefully considered.

Experts warn that the unchecked development of complex AI systems like OpenClaw could lead to unintended consequences, such as job displacement, increased inequality, and decreased transparency in decision-making.

Mitigating the Risks Associated with OpenClaw

To address the concerns surrounding OpenClaw, experts are advocating for a more cautious and transparent approach to AI development. This includes:

- Implementing robust testing and validation procedures to identify potential errors and biases.

- Developing more transparent and explainable AI models that provide insight into their decision-making processes.

- Encouraging a more nuanced understanding of the potential risks and consequences of complex AI systems.
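As one illustration of what validation could look like in a chained system, the sketch below places a check after every stage and stops the chain as soon as an output fails, instead of passing a bad result downstream. This is a generic pattern under stated assumptions, not a procedure the experts or OpenClaw's developers are known to use: the stages are ordinary functions standing in for models, and `run_with_gates` and `StageValidationError` are hypothetical names.

```python
from typing import Callable, List, Tuple

Stage = Callable[[str], str]       # stand-in for a call to one model
Validator = Callable[[str], bool]  # returns True if the output is acceptable


class StageValidationError(Exception):
    """Raised when a stage's output fails its validator."""

    def __init__(self, stage_name: str, output: str) -> None:
        super().__init__(f"stage {stage_name!r} produced invalid output: {output!r}")
        self.stage_name = stage_name
        self.output = output


def run_with_gates(stages: List[Tuple[str, Stage, Validator]], prompt: str) -> str:
    """Run stages in order, validating each output before it is passed on."""
    current = prompt
    for name, stage, is_valid in stages:
        output = stage(current)
        if not is_valid(output):
            # Halt here so later stages never see (and amplify) the bad output.
            raise StageValidationError(name, output)
        current = output
    return current


# Hypothetical two-stage chain: pull out the digits, then double the number.
gated_stages = [
    ("extract", lambda text: "".join(ch for ch in text if ch.isdigit()), str.isdigit),
    ("double", lambda text: str(int(text) * 2), str.isdigit),
]

print(run_with_gates(gated_stages, "order 21"))

try:
    run_with_gates(gated_stages, "no number here")
except StageValidationError as exc:
    print("halted at:", exc.stage_name)
```

The design choice is to fail early and name the failing stage. That addresses two of the concerns raised above at once: errors are not propagated further down the chain, and the point of failure is explicit.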

By prioritizing transparency, control, and accountability in AI development, we can mitigate the risks associated with systems like OpenClaw and ensure that the benefits of AI are realized while minimizing its negative impacts.
